AI pioneer Innoplexus and phytopharmaceutical company DrD are partnering toRead More

AI pioneer Innoplexus and phytopharmaceutical company DrD are partnering toRead More

Jun 24

Apr 24

Innoplexus wins Horizon Interactive Gold Award for Curia App

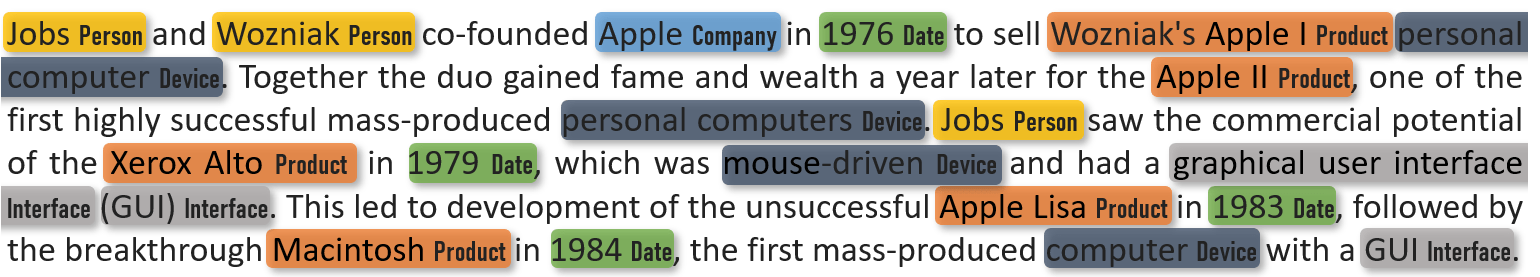

Named Entity Recognition (NER) is an information extraction technique within Natural Language Processing (NLP). It locates entities in unstructured or semi-structured text. These entities can be anything from a person to something very specific, such as a biomedical term. For example, NER can recognize that “pancreatic cancer” is a disease and “5-FU” is an intervention. NER plays an important role in enabling machines to understand text. To properly understand NER, we will first break down what NLP is. After that, we will explain how NER works and highlight new developments in this field.

NLP enables machines to understand language and supports seamless interaction between humans and machines. The earliest signs of NLP can be traced to the 1950s, when Alan Turing proposed that one criterion for measuring the intelligence of a machine is its ability to hold a “natural” conversation with a human. Since then, NLP has expanded to processing extremely large natural language data sets, with the goal of finding new ways of understanding and creating natural language.

NLP can be broken down into three tasks: the understanding, extraction, and recognition of text and speech. Besides these, there is a fourth process called Natural Language Generation (NLG). It differs from the others because most NLP technologies focus on processing text and speech rather than creating it.

Now that we have explained NLP, we can describe how Named Entity Recognition works. NER plays a major role in the semantic part of NLP, which extracts the meaning of words, sentences, and their relationships. Basic NER processes structured and unstructured texts by identifying and locating entities. For example, instead of identifying “Steve” and “Jobs” as different entities, NER understands that “Steve Jobs” is a single entity. More developed NER processes can classify the identified entities as well. In this case, NER not only identifies “Steve Jobs” but also classifies it as a person. In the following, we describe the two most popular NER methods.
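To make the "Steve Jobs" example concrete, here is a minimal sketch of how token-level predictions can be merged into multi-word entities. It assumes the widely used BIO tagging scheme (B = beginning, I = inside, O = outside), which this article does not prescribe; the function name `decode_bio` and the hand-written tags are purely illustrative.

```python
from typing import List, Optional, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge token-level BIO tags into (entity text, label) pairs."""
    entities: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_label: Optional[str] = None

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity starts; close any entity that was still open.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens = [token]
            current_label = tag[2:]
        elif tag.startswith("I-") and current_tokens and tag[2:] == current_label:
            # Continuation of the entity that is currently open.
            current_tokens.append(token)
        else:
            # "O" (outside) or an inconsistent tag closes the open entity.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None

    if current_tokens:
        entities.append((" ".join(current_tokens), current_label))
    return entities


# Hand-written tags standing in for the output of a real tagger (assumption).
tokens = ["Steve", "Jobs", "founded", "Apple"]
tags = ["B-PER", "I-PER", "O", "B-ORG"]
print(decode_bio(tokens, tags))  # [('Steve Jobs', 'PER'), ('Apple', 'ORG')]
```

The first step (locating) corresponds to finding where an entity begins and ends; the second step (classifying) corresponds to the label attached to each span.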

In the past, NER relied strongly on a knowledge base. This knowledge base is called an ontology, which is a collection of data sets containing words, terms, and their interrelations. Depending on the level of detail of an ontology, the result of NER can be very broad or topic-specific. Wikipedia, for example, would need a very high-level ontology to capture and structure all of its data. In contrast, a life-science-specific company like Innoplexus would need a far more detailed ontology due to the complexity of biomedical terms. Ontology-based NER is a machine learning approach. It excels at recognizing known terms and concepts in unstructured or semi-structured texts, but it relies strongly on updates. Without them, it can’t keep up with the ever-growing body of publicly available knowledge.
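The lookup idea behind an ontology-driven approach can be sketched as a longest-match search against a term list. This is a simplified illustration, not Innoplexus's implementation: the three-entry `ONTOLOGY` dictionary, the regular-expression tokenizer, and the function name `ontology_ner` are all assumptions made for the example.

```python
import re
from typing import Dict, List, Tuple

# Toy ontology: lowercase surface form -> entity class (illustrative entries only).
ONTOLOGY: Dict[str, str] = {
    "pancreatic cancer": "disease",
    "5-fu": "intervention",
    "steve jobs": "person",
}
# Longest term in the ontology, measured in tokens.
MAX_TERM_TOKENS = max(len(term.split()) for term in ONTOLOGY)


def ontology_ner(text: str) -> List[Tuple[str, str]]:
    """Return (mention, class) pairs for every ontology term found in the text."""
    # Very simple tokenizer: runs of word characters and hyphens.
    tokens = re.findall(r"[\w-]+", text)
    found: List[Tuple[str, str]] = []
    i = 0
    while i < len(tokens):
        matched_length = 0
        # Try the longest candidate first so multi-word terms win.
        for n in range(min(MAX_TERM_TOKENS, len(tokens) - i), 0, -1):
            candidate = " ".join(tokens[i:i + n])
            label = ONTOLOGY.get(candidate.lower())
            if label is not None:
                found.append((candidate, label))
                matched_length = n
                break
        # Skip past the match, or move on by one token if nothing matched.
        i += matched_length if matched_length else 1
    return found


print(ontology_ner("5-FU is used to treat pancreatic cancer."))
# [('5-FU', 'intervention'), ('pancreatic cancer', 'disease')]

# "Gemcitabine" is not in the toy ontology, so it goes unrecognized.
print(ontology_ner("Gemcitabine is used to treat pancreatic cancer."))
# [('pancreatic cancer', 'disease')]
```

The second call shows the dependence on updates: a term that is missing from the ontology is simply not found until someone adds it.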

Deep Learning NER is much more precise than its predecessor because it is able to cluster words. This is due to a technique called word embedding, which captures the semantic and syntactic relationships between words. Another advantage is deep learning itself. It can recognize terms and concepts that are not present in the ontology because it is trained on the way various concepts are used in written life science language. It learns automatically and can analyze topic-specific as well as high-level words. This makes deep learning NER applicable to a variety of tasks. Researchers, for example, can use their time more efficiently and focus more on research, because deep learning does most of the repetitive work. Currently, several deep learning methods for NER are available, but due to the competitiveness and recency of developments, it is difficult to pinpoint the best one on the market. If you are interested in a deeper understanding of Deep Learning NER in the clinical field, we recommend reading this article.
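The clustering effect of word embeddings can be illustrated with cosine similarity between word vectors. The sketch below uses hand-made three-dimensional vectors chosen only for demonstration; real embeddings are learned from large text corpora and have far more dimensions. The names `EMBEDDINGS`, `cosine`, and `most_similar` are hypothetical.

```python
import math
from typing import Dict, List

# Hand-made toy vectors (assumption): disease-like words point one way,
# drug-like words another. Real embeddings are learned, not written by hand.
EMBEDDINGS: Dict[str, List[float]] = {
    "cancer": [0.9, 0.1, 0.0],
    "carcinoma": [0.8, 0.2, 0.1],
    "tumor": [0.7, 0.3, 0.0],
    "aspirin": [0.1, 0.9, 0.2],
    "ibuprofen": [0.2, 0.8, 0.3],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: values near 1.0 mean the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word: str) -> str:
    """Return the other vocabulary word whose vector is closest to `word`."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine(target, EMBEDDINGS[w]))


print(most_similar("aspirin"))  # ibuprofen

# Words of the same kind score higher than words of different kinds.
same_kind = cosine(EMBEDDINGS["cancer"], EMBEDDINGS["carcinoma"])
different_kind = cosine(EMBEDDINGS["cancer"], EMBEDDINGS["aspirin"])
print(same_kind > different_kind)  # True
```

Because similar words end up near each other, a model can treat an unseen term that sits close to known disease terms as a likely disease, even if no ontology lists it.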

NER plays a key role by identifying and classifying entities in a text. It is the first step in enabling machines to understand what seems to be an unstructured sequence of words. Nevertheless, there is still a long way to go before machines understand text like a human. This becomes particularly clear when going into detail: as we saw, NER understands “Steve Jobs” as a person. However, NER can’t differentiate between all the people called “Steve Jobs”. The next step is assigning a unique identity to an entity. This is done by linking and normalizing. Find out more about Entity Normalization in our upcoming blog post.
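As a rough preview of the normalization step, the following sketch maps different surface forms of the same concept to one identifier. It covers only synonym normalization, not the harder problem of telling apart different people with the same name. The identifiers such as `DRUG:0001` are made up for this example and do not come from any real vocabulary; `SYNONYMS` and `normalize` are hypothetical names.

```python
from typing import Dict, Optional

# Surface form (lowercase) -> made-up canonical identifier (assumption).
SYNONYMS: Dict[str, str] = {
    "5-fu": "DRUG:0001",
    "5-fluorouracil": "DRUG:0001",
    "fluorouracil": "DRUG:0001",
    "pancreatic cancer": "DISEASE:0001",
    "cancer of the pancreas": "DISEASE:0001",
}


def normalize(mention: str) -> Optional[str]:
    """Map a recognized mention to its canonical identifier, if one is known."""
    # Lowercase and collapse whitespace so trivial variants compare equal.
    key = " ".join(mention.lower().split())
    return SYNONYMS.get(key)


print(normalize("5-FU"))          # DRUG:0001
print(normalize("Fluorouracil"))  # DRUG:0001
print(normalize("Steve Jobs"))    # None
```

Two mentions that normalize to the same identifier can then be treated as the same entity in downstream analysis.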
